Introduction

Scache is memory-backed persistent storage for preserving session data across page accesses. It aims to ease transient session data management in complicated, distributed web applications by being network accessible and by offering a more fine-grained solution than plain $_SESSION.

Despite its misleading name, scache is actually persistent storage: it guarantees either to hold the whole session or, on a severe error condition, to expire the session as a whole. Scache has a very small, sub-megabyte system footprint, yet it is capable of scaling to very large amounts of data on a distributed PHP cluster. Scache positions itself somewhere between simple cache-only key-value stores and heavy disk-backed NoSQL solutions.

Features

Scache has both a class API and a procedural API for accessing its features. The features are:

- Private persistent storage for reliably saving session variables through the lifetime of a single session.

- Shared persistent storage for storing non-expiring data accessible to all clients.

- Shared cache for caching temporary data to be reused by clients.

- Shared counters for keeping track of stored data and triggering its invalidation.

- Ring datatype as a tool for implementing work queues and stacks.

The main difference from other similar implementations is scache's tree-like keyspace, which references data the way data is referenced on a filesystem. A structured keyspace allows organizing mutually dependent data under the same subdirectory, which makes purging a complete dataset easier. All features above support the structured keyspace model.

Preface: session management in a clustered environment

While scache has a built-in session handler emulator for traditional PHP session handling, it is strongly suggested to avoid using $_SESSION altogether, at least if no proper locking is performed.

Using PHP's built-in session manager has so far been easy and has worked well on traditional web pages, where the browser loads complete pages and in practice only one server process accesses the session data at a time.

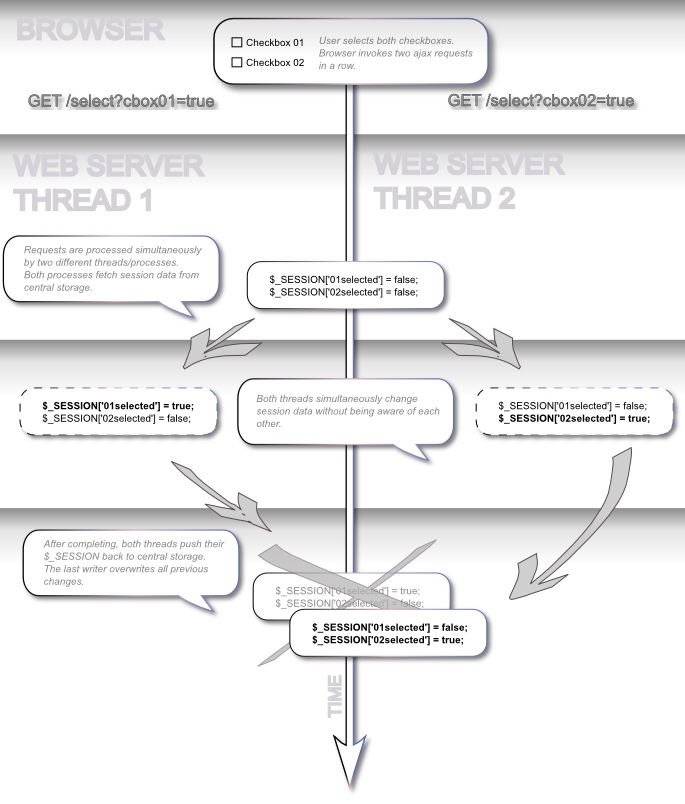

Modern AJAX-based applications, in contrast, are quite likely to issue several requests at once. In the worst case these are processed simultaneously by different server processes (or, in cluster setups, even on different physical servers). This creates a race condition in which more than one server process or thread accesses and modifies its own copy of the $_SESSION data at the same time.

There are of course some browser features that make this race condition less common. For example, clients prefer to pipeline requests over keepalive HTTP/1.1 connections, which as a side effect serializes the requests, and JavaScript is in principle a single-threaded process. But these countermeasures depend on the client working in line with expectations, not on the design of the web application. Thread safety should be dictated by the server application alone, not by multitudes of clients all behaving as expected. One perfectly valid non-persistent HTTP/1.0 connection is enough to break all of these serialization assumptions that depend on browser behaviour.

As a countermeasure to the situation above, PHP's built-in filesystem-based session handler locks the session data and effectively serializes access to one process at a time. This works only as long as all processes accessing the session run locally on a single server.

In a networked, clustered environment, where several separate servers access session data in a central location, PHP's internal session locking is not available. There are no common filesystem locks or semaphores that PHP could use across physical servers for mutual coordination.

Reliable session locking in a clustered multi-server environment is difficult to implement. Probably the most common solution is to store session data in a database table and exclude these race conditions with row-level locking, so that within a transaction only a single executor holds the session at a time. But besides storing session data in a database being expensive and hard to scale, this approach also serializes requests unnecessarily in situations where the requests do not contend for any shared variable that needs protection.

The problem in the image above is actually not the lack of locking, but that the whole $_SESSION structure is copied to the executing threads, changed independently and written back to the central repository as a whole. Because $_SESSION is basically a single variable, it has to be copied to the executing thread as a single entity and later pushed back, modified or unmodified, possibly overwriting other changes that occurred in the meantime.
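The overwrite can be reproduced without any session handler at all. The following is only a minimal simulation: a plain PHP array stands in for the central repository, the two "requests" are simply two copies of it, and all variable names are made up for illustration.

```php
<?php
// Simulation only: a plain array stands in for the central session store.
// No scache functions and no PHP session functions are called here.

$store = ['cart' => 1, 'visits' => 10];   // central copy of the session

// Two requests each load their own full copy, as a session handler would.
$requestA = $store;
$requestB = $store;

// Each request changes a different variable in its private copy.
$requestA['cart'] = 2;
$requestB['visits'] = 11;

// Both write the whole structure back; the later write wins.
$store = $requestA;
$store = $requestB;

// Request A's change to 'cart' has been silently overwritten.
var_dump($store['cart']);   // int(1)
var_dump($store['visits']); // int(11)
```

Neither request touched the other's variable, yet one update is lost, simply because the unit of writing is the whole array.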

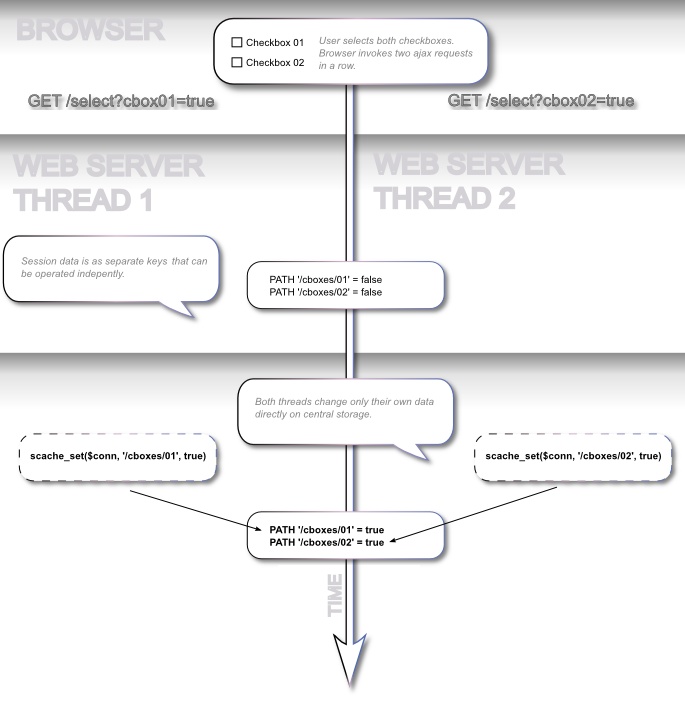

Scache's solution is instead to break that big array into single values and to make it possible to operate directly on these variables where they are stored on the server, instead of copying variables back and forth between the executing web server and the back-end storage.

Scache is designed to give a fast, flexible API for lockless access to and modification of single variables, each assigned to a single purpose, in a central shared repository. Scache tries to make it easy for the programmer to access just the variables needed for the current operation, instead of storing batches of data under a single key and unnecessarily moving them back and forth between the server and the central repository.
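A minimal sketch of the per-variable idea, assuming a hypothetical in-memory KeyStore class that merely stands in for a central repository. It is not the scache API, and the key names are invented; it only shows that requests operating on single keys in place do not overwrite each other's unrelated changes.

```php
<?php
// Hypothetical stand-in for a central store with per-key operations.
// This is an illustration, not the scache API.
final class KeyStore
{
    private $values = [];

    public function set(string $key, $value): void
    {
        $this->values[$key] = $value;
    }

    public function get(string $key)
    {
        return $this->values[$key] ?? null;
    }

    // Modify a counter where it is stored instead of copying it out and back.
    public function add(string $key, int $delta): int
    {
        $this->values[$key] = ($this->values[$key] ?? 0) + $delta;
        return $this->values[$key];
    }
}

$store = new KeyStore();
$store->set('/cart/items', 1);
$store->set('/stats/visits', 10);

// Request A touches only the cart, request B only the visit counter.
$store->set('/cart/items', 2);
$store->add('/stats/visits', 1);

var_dump($store->get('/cart/items'));   // int(2)
var_dump($store->get('/stats/visits')); // int(11)
```

Both changes survive because each request wrote only the value it actually changed.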

Background and motivation

Scache's roots are somewhere in the year 2002, at the dawn of Web 2.0, when systems were slower and hardware was more expensive. There was a need to find a good, sustainable way to create a web application platform that could later be extended from single stand-alone servers to a larger, clustered multi-server environment.

Scache's principles are:

Breaking data to small pieces

In those days, with hardware performance and network speeds being a fraction of what they are today, there was a strong need to save clock cycles and bits wherever they could be saved.

The important thing was to do just the bare minimum, so one of scache's design principles was to provide a way to break data into small pieces, so that only the required parts are accessed and all unneeded data stays in place, spared from unnecessary unserialization and possible transfer over the network.

Scache is internally optimized for storing large numbers of single values, and its API allows accessing these variables efficiently and performing multiple operations on them at once. With a versatile API, scache tries to reduce the situations where it is tempting for the programmer to copy a data array, operate on it inside a PHP script and push it back to storage as a whole.

As it happens, the approach chosen in those days eases today's processing of shared data, as described in the session management chapter. Big script-managed data arrays eventually complicate shared data operations when there are multiple processes serving multiple clients. It is strongly encouraged to store data in small chunks, so that servers operating simultaneously on neighbouring variables interfere with each other as little as possible.

Data persistence

At that time there were some key-value caching solutions, but the concept of a key-value cache has some general issues that make it a poor fit for the aggressive optimizations being sought.

The main strength and the main drawback of a cache is that it allows values to be dropped.

With a cache, you can very easily optimize out database queries and use already generated results instead. You try to fetch the data from the cache and, if it is not there, get it from the database. In other words, you can skip the second step if the first one succeeds.

But what about storing these expensively constructed result sets for reuse? You can push a result to the cache to be reused by other clients, but a cache can drop data any time it wants, for example to free system resources.

To preserve that expensively generated result, you need to push it to the cache and also, redundantly, to some persistent and slower storage, for example a database table. When storing to a cache, you need to do both steps.

If the cache instead guaranteed to store data persistently, you could optimize out that extra in-case-of-failure backup.

So, one of scache's functions is to provide persistent storage. Scache guarantees that everything stored under the same session id will be there. If there is a fatal condition such as running out of memory, the whole session is wiped, so that a session client can be built on the assumption that either everything is there or there is no session.

Structuring your data

The second issue with key-value storage is data organization.

A modular, gradually growing web application and the flat keyspace of a non-modular key-value store don't play very well together. To reduce namespace pollution, modularity needs to be emulated in some way on that flat keyspace too, most commonly by systematic key prefixing.

Despite their slight overhead, prefixed keys work just fine for storing data in a caching-only solution. But when running persistent storage, you also need to take into account how to get rid of obsolete data. Of course, with suitably prefixed keys you can inefficiently enumerate all keys and delete those with a selected prefix, but that sounds a bit like enumerating all files on your hard disk and selectively deleting some of them one by one.

The filesystem's answer to the problem is subdirectories, in which you can group all mutually dependent files. With a single, more or less accidental, hit of the delete key on the parent folder, you can get rid of all of them at once.

The same possibility would be nice in key-value storage too, so scache implements a structured, tree-like keyspace to provide it.

With a directory-modelled keyspace you can assign prefixes and subprefixes to your web app's modules and functionality, and the keyspace can grow freely as you add more modules to your web application. Scache's trees are very lightweight nested balanced binary trees, with practically no performance difference between storing a given number of keys nested and storing them in a flat keyspace.
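The difference between deleting by prefix and deleting a subdirectory can be sketched with plain PHP arrays. The helpers tree_put and tree_purge below are hypothetical and say nothing about how scache implements its trees or what its API looks like; nested arrays merely play the role of directories, and the stored values are assumed to be scalars.

```php
<?php
// Illustration only: nested arrays model a tree-like keyspace.
// tree_put() and tree_purge() are hypothetical helpers, not scache functions.

function tree_put(array &$tree, string $path, $value): void
{
    $node = &$tree;
    foreach (explode('/', trim($path, '/')) as $part) {
        if (!isset($node[$part]) || !is_array($node[$part])) {
            $node[$part] = [];          // create the missing "directory"
        }
        $node = &$node[$part];
    }
    $node = $value;                     // the last path segment holds the value
}

function tree_purge(array &$tree, string $path): void
{
    $parts = explode('/', trim($path, '/'));
    $last = array_pop($parts);
    $node = &$tree;
    foreach ($parts as $part) {
        if (!isset($node[$part]) || !is_array($node[$part])) {
            return;                     // nothing stored under this path
        }
        $node = &$node[$part];
    }
    unset($node[$last]);                // drops the whole subtree at once
}

// Flat keyspace: every key must be visited to find the matching prefix.
$flat = [
    'groups.gid-7.members' => 'alice,bob',
    'groups.gid-7.name'    => 'admins',
    'groups.gid-9.name'    => 'guests',
];
foreach (array_keys($flat) as $key) {
    if (strpos($key, 'groups.gid-7.') === 0) {
        unset($flat[$key]);
    }
}

// Tree keyspace: one operation on the parent removes everything below it.
$tree = [];
tree_put($tree, '/groups/gid-7/members', 'alice,bob');
tree_put($tree, '/groups/gid-7/name', 'admins');
tree_put($tree, '/groups/gid-9/name', 'guests');
tree_purge($tree, '/groups/gid-7');

// In both cases only the gid-9 data remains.
```

The end result is the same, but the flat variant has to inspect every key in the store, while the tree variant only walks down to the parent node.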

Inter client communication

The third issue with data persistence is the need to detect when data has become obsolete.

In scache, the helpers for that are counters. Counters are atomic integers shared between all session clients with a common namespace. With counters you can emulate tags on session data and, by detecting changes in the values of these tags, also detect the need to refresh the data.

For example, to detect whether cached group membership data is still valid, one needs to keep track of whether users have been deleted or modified, groups have been modified or deleted, or memberships have changed.

    $path = "/groups/gid-$gid/members";

    /* read the three dependency counters and the cached entry with one scache_iov call */
    list($userserial, $groupserial, $memberserial, $data) =
        scache_iov(($sess = session()),
                   Array(Array(SCIOP_VGET, '/c/userserial'),
                         Array(SCIOP_VGET, '/c/groupserial'),
                         Array(SCIOP_VGET, '/c/memberserial'),
                         Array(SCIOP_CHGET, $path)));

    /* the entry is valid only if it was stored with the current counter values */
    if ($data &&
        ($data['userial'] === $userserial) &&
        ($data['gserial'] === $groupserial) &&
        ($data['mserial'] === $memberserial)) {
        /* cache-hit */
        return $data['actualdata'];
    } else {
        /* cache-miss, get from db and store */
        $data = waste_clockcycles_for_getting_members_from_slow_database($gid);
        scache_chput($sess, $path,
                     Array('userial' => $userserial,
                           'gserial' => $groupserial,
                           'mserial' => $memberserial,
                           'actualdata' => $data));
        return $data;
    }

In the example above, all you need to do elsewhere is to increment the counters:

    if (update_user_etime_succeed())
        scache_vadd(session(), '/c/userserial', 1);

In other words, data is tagged with all the dependencies it has, while each module updates its own dependency counters.

In general, counters, shared storage and rings are utilities for passing messages and data between connected session clients.

Performance and technology

The scached server is a single-threaded, lightweight daemon process. With a file size below 100 kB and a startup memory consumption below half a megabyte, scached is also suitable for small embedded systems.

Scache stores data as reference-counted, fixed-size chunks in nested red-black balanced binary trees, with an optimization of not rebalancing on data deletion. Scache avoids heavy kernel calls, performs its I/O loop operations on the stack or in reused, preallocated memory chunks, and is internally designed to avoid in-memory copying and memory allocation very aggressively. For example, multiple query operations by multiple simultaneous queriers ideally pass without any memory allocation or in-memory copying.

Performance tests measuring raw back-end power on a locally installed server can easily exceed a million operations per second on modern hardware.

When accessed over a network, the speed towards a single client drops drastically because of inevitable network latency. The server can still serve a multitude of clients at the same cumulative speed, though network delays take a lot away from each individual client's request rate.

Scached does not and will not support storing data on disk. Scached is memory-backed only, so you need to take that into account when sizing memory and when storing valuable data. At the very least, have enough swap space available.

So...

That's all for the introduction for now. Please see the tutorial and API documentation for more information. Until I get some time to write a commenting system, you can disagree with me by mailing me at scache@nanona.fi... :-)